3.3.3 Learn from the algorithm itself about its decisions

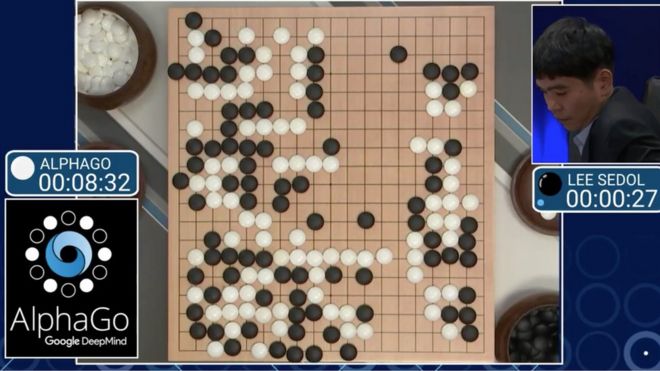

Interpretability can help humans learn from machines too. In 2016, AlphaGo played a mysterious move against Lee Sedol, one of the world's top Go players, and it stunned everyone watching. No one in the room could make sense of it.[1]

Fan Hui, a three-time European Go Champion, was gobsmacked by the move too.

This historic incident shows that humans can learn from machines too, provided we understand how the system works and why it succeeds. In areas like reinforcement learning (RL), interpretability can go a long way toward explaining the decisions an algorithm makes.

Recently, OpenAI released a clever hide-and-seek game played by two teams of baby RL agents: one team (the seekers) has to find the other (the hiders) in an arena with objects placed at random. Through reinforcement learning, the agents learned to move these objects around and to make use of the game's physics. Most of these moves were never taught to the agents; by learning on their own, they even discovered how to exploit bugs in the game and break its rules.[2]

Citations